10 Criteria for Evaluating Training Programs

Applying clear criteria when evaluating a training program is essential to ensuring its effectiveness and value. This deep dive explores ten key factors to consider when assessing the success of any training initiative. From measuring learning outcomes to analyzing program sustainability, it offers a comprehensive framework for evaluating training programs, so you can make informed decisions and improve future training initiatives.

The criteria range from foundational elements like defining evaluation criteria and measuring learning outcomes to more nuanced considerations such as cost-effectiveness and program sustainability. Understanding these critical aspects is vital for creating impactful training experiences that align with organizational goals and participant needs.

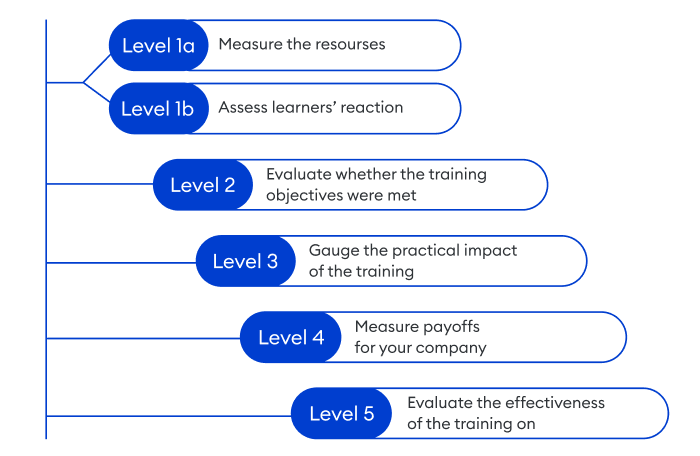

Defining Evaluation Criteria

Evaluating training programs is crucial for ensuring their effectiveness and maximizing the return on investment. A robust evaluation process goes beyond simple satisfaction surveys; it requires a systematic approach that considers multiple facets of the program’s impact. This involves defining specific criteria that measure learning outcomes, program design, and participant engagement, ultimately leading to actionable insights for improvement.

Comprehensive evaluation criteria provide a structured framework for assessing the value and impact of training programs. This allows organizations to identify strengths, pinpoint areas for improvement, and make data-driven decisions about future training initiatives. This process ultimately translates into a more efficient and effective use of resources, leading to better-trained employees and enhanced organizational performance.

Learning Outcomes

Defining learning outcomes is paramount to evaluating a training program’s success. These are measurable statements that describe what participants should know, understand, or be able to do after completing the program. Effective learning outcomes focus on specific skills, knowledge, and behaviors.

- Knowledge Acquisition: Assess the extent to which participants have acquired new information and concepts. This can be measured through quizzes, tests, or case studies. For example, a training program on financial modeling should demonstrate that participants can accurately calculate key financial metrics.

- Skill Development: Evaluate the improvement in participants’ practical skills. This involves observing their performance in real-world situations or through practical exercises. For instance, a training program on customer service should evaluate if participants can effectively handle difficult customer interactions.

- Behavioral Changes: Examine whether the training program has led to observable changes in participants’ behaviors and attitudes. Observation, surveys, and feedback mechanisms can be employed to assess the shift in behaviors. For example, a leadership training program should demonstrate a shift in the participants’ leadership styles towards a more collaborative approach.

Program Design

A well-designed training program is fundamental to its success. Evaluation criteria for program design focus on factors that contribute to a positive learning experience and effective knowledge transfer.


- Curriculum Relevance: Assess the alignment between the training content and the identified needs of the target audience. Does the program directly address the skills gaps and knowledge deficiencies within the organization? This can be measured through surveys and feedback from participants.

- Instructional Methods: Evaluate the effectiveness of the chosen instructional methods. Are they engaging, interactive, and conducive to learning? Observations of the training sessions and participant feedback can provide insights.

- Resource Adequacy: Assess the availability and quality of learning materials, technology, and support systems. Is the program resourced well enough to support a successful learning experience?

Participant Engagement

Participant engagement is critical for ensuring knowledge retention and application. Evaluating participant engagement provides insights into the program’s overall effectiveness and areas for improvement.

- Attendance and Participation: Track attendance rates and assess participant engagement during the training sessions. High attendance and active participation indicate a positive learning environment. Monitoring attendance and participation can highlight any areas where the training sessions need improvement.

- Feedback and Satisfaction: Collect feedback from participants to understand their satisfaction with the program. Surveys, focus groups, and open-ended feedback mechanisms are essential for gauging their overall satisfaction with the program. This helps to identify any areas where the training could be improved.

- Post-Training Performance: Evaluate the impact of the training on participants’ performance in their roles after the training. Tracking performance metrics after the program helps determine whether the training program has achieved its intended outcomes. This provides valuable insights into the effectiveness of the training and its long-term impact on the participants.

Evaluation Criteria Table

| Category | Criteria |
|---|---|
| Learning Outcomes | Knowledge Acquisition, Skill Development, Behavioral Changes |
| Program Design | Curriculum Relevance, Instructional Methods, Resource Adequacy |
| Participant Engagement | Attendance and Participation, Feedback and Satisfaction, Post-Training Performance |

Measuring Learning Outcomes

Assessing the effectiveness of a training program hinges critically on measuring the learning outcomes achieved by participants. This involves more than just checking attendance; it demands a robust evaluation strategy that captures the knowledge, skills, and attitudes acquired during the training. This crucial step provides valuable feedback for program improvement and ensures the training’s impact is tangible and measurable.

Methods for Assessing Participant Learning

Different methods can be employed to gauge the effectiveness of a training program. These methods range from traditional assessments to modern survey techniques, each with its own strengths and weaknesses. Selecting the right method depends on the specific learning outcomes being targeted and the resources available.

- Assessments: Formal assessments, such as tests, quizzes, and practical exercises, are essential for evaluating knowledge and skills. They offer a structured way to measure specific learning objectives and provide a clear indication of participant proficiency. Examples include multiple-choice questions, short answer responses, and simulations that assess the application of learned skills.

- Surveys: Surveys, including questionnaires and feedback forms, offer valuable insights into participants’ perceptions and attitudes. They can be used to gather information on the overall experience, the relevance of the training material, and the participants’ satisfaction with the program. Rating-scale questions can be quantified, while open-ended questions add a qualitative understanding of the learning process.

- Performance Evaluations: Evaluating participants’ performance on the job after the training provides a practical measure of the transfer of learning. Observing how they apply the acquired knowledge and skills in their daily work is a critical indicator of the training’s effectiveness. This could involve direct observation by supervisors, performance reviews, or analyzing work output metrics.

Types of Learning Outcomes and Measurement

Training programs aim to develop various learning outcomes, including knowledge, skills, and attitudes. Measuring these outcomes effectively requires tailored assessment methods.

- Knowledge: Knowledge outcomes focus on the acquisition of facts, concepts, and principles. Assessments like multiple-choice tests, true/false questions, and short answer questions are suitable for measuring knowledge retention. A critical analysis of case studies can also gauge the ability to apply knowledge in real-world scenarios.

- Skills: Skill development focuses on practical application and proficiency. Methods like practical exercises, simulations, and performance-based tasks are ideal for evaluating skills. Examples include operating machinery, presenting a speech, or performing a technical procedure.

- Attitudes: Attitude assessment examines the development of desired perspectives and values. Surveys, interviews, and observation can help reveal changes in attitude after training. Analyzing participants’ feedback and their interactions during the program is also helpful.
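As a minimal sketch of quantifying knowledge outcomes, the Python below compares pre- and post-training test scores. It assumes scores on a 0–100 scale keyed by participant ID; the IDs, scores, and function names are hypothetical. Besides the raw gain, it computes a normalized gain, the share of the possible improvement a participant actually achieved, which is one common way (not the only one) to compare learners who started at different levels.

```python
def score_gains(pre: dict[str, float], post: dict[str, float]) -> dict[str, float]:
    """Raw gain (post-test minus pre-test) for participants who took both tests."""
    both = pre.keys() & post.keys()  # ignore anyone missing one of the two tests
    return {pid: post[pid] - pre[pid] for pid in sorted(both)}


def normalized_gain(pre_score: float, post_score: float, max_score: float = 100.0) -> float:
    """Share of the available improvement achieved: (post - pre) / (max - pre)."""
    if pre_score >= max_score:
        raise ValueError("pre-test score is already at the maximum; gain is undefined")
    return (post_score - pre_score) / (max_score - pre_score)


# Hypothetical scores for three participants; "c" missed the post-test.
pre_scores = {"a": 50, "b": 70, "c": 40}
post_scores = {"a": 80, "b": 85}
print(score_gains(pre_scores, post_scores))              # {'a': 30, 'b': 15}
print(normalized_gain(50, 80), normalized_gain(70, 85))  # 0.6 0.5
```

Here participant a closed 60% of the remaining gap and participant b closed 50%, even though a’s raw gain is twice b’s.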

Comparing Assessment Methods

The following table provides a comparison of different assessment methods, highlighting their strengths and weaknesses.

| Assessment Method | Strengths | Weaknesses |
|---|---|---|
| Tests/Quizzes | Structured, objective, efficient for large groups, readily quantifiable. | May not accurately measure complex skills or critical thinking, potentially limited in assessing application. |
| Surveys/Questionnaires | Gather diverse perspectives, assess perceptions, easy to administer. | Subjectivity in responses, potential for bias, may not reflect actual knowledge or skills. |
| Performance Evaluations | Directly assess skills application in real-world scenarios, tangible evidence of learning transfer. | Time-consuming, resource-intensive, potential observer bias, may not capture all learning outcomes. |

Assessing Program Design and Structure

Evaluating a training program’s design and structure is crucial for ensuring its effectiveness. A well-structured program considers not only the curriculum and materials but also the delivery methods, fostering engagement and maximizing learning outcomes. A thorough evaluation of these elements allows for improvements and adjustments, leading to a more impactful training experience for all participants.

The effectiveness of a training program hinges on its ability to adapt to the needs of the learners and provide a supportive environment for knowledge acquisition. By meticulously examining the curriculum, materials, and delivery methods, we can identify strengths and weaknesses, paving the way for improvements and adjustments to maximize learning outcomes. This approach also involves evaluating program logistics and resource management, ensuring efficient use of time and resources.

Curriculum Evaluation

A comprehensive evaluation of the curriculum involves examining the alignment of course content with learning objectives. The curriculum should cover essential topics and skills relevant to the intended learning outcomes. It’s also important to consider the sequencing of topics and the integration of various learning methodologies to enhance comprehension. Incorporating real-world examples, case studies, and interactive exercises can further solidify understanding.

Material Evaluation

The effectiveness of training materials is paramount. Evaluations should assess the clarity, accuracy, and relevance of the materials to the target audience. Using a variety of media, such as videos, presentations, and interactive exercises, can enhance engagement and understanding. Materials should be readily accessible, easily understandable, and relevant to the learners’ current skill level.


Delivery Method Evaluation

The chosen delivery method plays a significant role in participant engagement and learning outcomes. For example, online courses, in-person workshops, and blended learning approaches each have their own strengths and weaknesses. An effective evaluation considers the suitability of the method for the specific training needs, the target audience, and the available resources. Factors like instructor expertise, technology proficiency, and participant interaction should be assessed.

Evaluating Instructional Strategies

Different instructional strategies can significantly impact participant engagement. For instance, active learning strategies, such as group discussions and problem-solving exercises, often lead to higher levels of engagement and knowledge retention compared to passive learning methods. An effective evaluation should consider how well the chosen strategies align with the learning objectives and cater to the diverse learning styles of participants.

Example of an Active Learning Strategy

An example of an active learning strategy is using simulations to demonstrate practical application of the training content. By placing participants in realistic scenarios, simulations allow them to apply their knowledge and develop critical thinking skills in a safe environment. The effectiveness of simulations can be assessed through participant feedback, observations during the exercise, and post-training assessments.

Logistics and Resource Management Framework

A structured framework for evaluating program logistics and resource management ensures efficient use of time and resources. This includes assessing the adequacy of facilities, equipment, and personnel. Factors such as scheduling, budget allocation, and participant support should also be considered. For instance, clear communication channels and timely updates regarding program progress are vital for effective resource management.

Regular reviews and adjustments to the framework are essential for ongoing improvement.

Example of Resource Allocation

A company might allocate more resources to training programs that are expected to yield high returns on investment (ROI). A clear understanding of the budget and available resources can help guide decisions on resource allocation, ensuring the program aligns with the organization’s strategic goals and financial constraints.

Evaluating Participant Engagement and Satisfaction

Understanding how participants feel about a training program is crucial for its success. Positive experiences foster retention of knowledge and skills, encourage future participation, and ultimately contribute to achieving the program’s objectives. A well-designed training program must not only impart knowledge but also create a positive learning environment. This involves actively seeking feedback from participants to identify areas for improvement and demonstrate a commitment to their growth and development.

Participant feedback is not merely a formality; it is a vital tool for program refinement. Gathering input from those who have directly experienced the training allows for a nuanced understanding of strengths and weaknesses. Constructive criticism from participants reveals blind spots in the program’s design, delivery, and overall impact, ultimately enabling the development of a more effective and engaging learning experience. This feedback loop is critical for iterative improvement, making the training program more valuable for future participants.

Strategies for Gathering Participant Feedback

Collecting feedback effectively is key to understanding participant experiences. Several methods are available, each with its own strengths and weaknesses, and combining them gives a more complete picture of participant engagement and satisfaction.

Methods for Collecting Participant Feedback

The following table compares common methods for collecting participant feedback.

| Method | Description | Strengths | Weaknesses |
|---|---|---|---|
| Surveys | Standardized questionnaires with pre-defined questions. | Efficient for collecting large amounts of data, allows for quantitative analysis. | Limited depth of understanding, may not capture nuanced feedback. |
| Focus Groups | Small group discussions facilitated by a moderator. | Provides in-depth qualitative data, allows for exploration of specific themes. | Can be time-consuming, susceptible to groupthink. |
| Interviews | One-on-one conversations with participants. | Highly personalized feedback, allows for probing and clarification. | Time-intensive, may not capture the collective sentiment of the group. |
| Observations | Directly observing participants during training sessions. | Provides insights into participant engagement in real-time. | Can be subjective, may not capture all aspects of the experience. |
| Online forums/discussion boards | Platforms for participants to share their thoughts and experiences. | Provides continuous feedback, allows for ongoing dialogue. | May not be accessible to all participants, can be difficult to moderate. |
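Survey results are easiest to compare across sessions when they are summarized the same way each time. A minimal sketch, assuming a 1–5 agreement scale (the scale, the question, and the responses are hypothetical), reports the response count, the mean rating, and the top-two-box share, that is, the fraction of respondents choosing one of the two highest options.

```python
from statistics import mean


def summarize_likert(ratings: list[int], scale_max: int = 5) -> dict[str, float]:
    """Summarize ratings on a 1..scale_max agreement scale."""
    if not ratings:
        raise ValueError("no ratings to summarize")
    if any(r < 1 or r > scale_max for r in ratings):
        raise ValueError("rating outside the 1..scale_max range")
    # "Top-two-box": share of respondents choosing one of the two highest options.
    top_two = sum(1 for r in ratings if r >= scale_max - 1)
    return {
        "n": len(ratings),
        "mean": round(mean(ratings), 2),
        "top_two_box": round(top_two / len(ratings), 2),
    }


# Hypothetical answers to "The training was relevant to my role" (1 = strongly disagree).
print(summarize_likert([5, 4, 4, 3, 2, 5]))
# {'n': 6, 'mean': 3.83, 'top_two_box': 0.67}
```

Reporting the response count alongside the scores makes it clear how much weight a given result can bear.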

Considering Cost-Effectiveness and ROI

A crucial aspect of evaluating any training program is understanding its financial impact. Simply achieving learning objectives isn’t enough; the program must be demonstrably worthwhile in terms of the resources invested. This section dives into the financial analysis required to determine the program’s cost-effectiveness and return on investment (ROI).

Evaluating the financial aspects of a training program requires a meticulous approach to identifying, quantifying, and ultimately assessing the return on investment. This process shows whether the program aligns with the organization’s financial goals and has a positive impact on the bottom line.

Identifying and Quantifying Training Costs

Understanding the full financial picture of a training program necessitates a clear identification of all associated costs. These costs can be broadly categorized into direct and indirect expenses.

- Direct costs include the instructor’s fees, training materials, venue rental, and any technology required for the training sessions. These are easily identifiable and directly attributable to the program.

- Indirect costs, on the other hand, are more nuanced. These include administrative overhead such as scheduling, as well as participant travel and the productivity lost while employees are away from their regular work. These costs are often overlooked, but accounting for them accurately is essential for a complete picture of the financial investment.
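A simple cost ledger makes the direct/indirect split explicit. In this sketch, the cost categories and amounts are hypothetical, all figures are assumed to be in one currency, and lost productivity is approximated as participants × training hours × a loaded hourly cost, which is an assumption rather than a standard formula.

```python
from dataclasses import dataclass, field


@dataclass
class TrainingCosts:
    """Hypothetical cost ledger; all amounts must be in the same currency."""
    direct: dict[str, float] = field(default_factory=dict)
    indirect: dict[str, float] = field(default_factory=dict)

    def add_lost_productivity(self, participants: int, hours: float, hourly_cost: float) -> None:
        # Time away from regular work, valued at an assumed loaded hourly cost per participant.
        self.indirect["lost_productivity"] = participants * hours * hourly_cost

    def total(self) -> float:
        # Total training cost = all direct costs + all indirect costs.
        return sum(self.direct.values()) + sum(self.indirect.values())


costs = TrainingCosts(
    direct={"instructor": 4000, "materials": 600, "venue": 1400},
    indirect={"scheduling": 300, "travel": 1200},
)
costs.add_lost_productivity(participants=20, hours=8, hourly_cost=45)
print(costs.total())  # 14700
```

In this example the lost-productivity estimate (7,200) exceeds all direct costs combined (6,000), which illustrates why leaving indirect costs out can badly understate the investment.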

Calculating Return on Investment (ROI)

The ROI calculation provides a standardized metric for evaluating the profitability of the training program. A positive ROI indicates that the training program generates more value than the cost incurred.

| Component | Description | Calculation |
|---|---|---|
| Training Costs | Total direct and indirect costs associated with the training program. | Sum of all direct and indirect costs. |
| Increased Productivity | Quantifiable gains in productivity resulting from the training program. This could be measured by improved output or faster task completion. | (New productivity level – Old productivity level), converted to monetary value |
| Reduced Errors | Quantifiable reduction in errors or mistakes resulting from the training program. | (Old error rate – New error rate), converted to monetary value |
| Improved Quality | Quantifiable improvements in product or service quality attributable to the training program. | (New quality metric – Old quality metric), converted to monetary value |
| Return on Investment (ROI) | The percentage of return on the investment made in the training program. | [(Increased Productivity + Reduced Errors + Improved Quality) – Training Costs] / Training Costs × 100% |

$$\text{ROI} = \frac{\text{Increased Value} - \text{Training Costs}}{\text{Training Costs}} \times 100\%$$

A crucial step in calculating ROI is accurately quantifying the “increased value” generated by the training program. This might include improvements in employee performance, reduced errors, or enhanced customer satisfaction. It’s important to establish clear metrics to track these improvements before, during, and after the training program.
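The ROI calculation in this section can be sketched as a small function. It assumes every benefit has already been converted to currency (for example, hours saved multiplied by an hourly cost); the benefit names and amounts are hypothetical.

```python
def training_roi(monetized_benefits: dict[str, float], training_costs: float) -> float:
    """ROI in percent: (increased value - training costs) / training costs * 100."""
    if training_costs <= 0:
        raise ValueError("training costs must be positive")
    # Increased value is the sum of all monetized gains attributed to the training.
    increased_value = sum(monetized_benefits.values())
    return (increased_value - training_costs) / training_costs * 100


# Hypothetical benefits, each already converted to currency.
benefits = {"increased_productivity": 12000, "reduced_errors": 4500, "improved_quality": 3500}
print(training_roi(benefits, training_costs=16000))  # 25.0
```

A negative result means the monetized benefits did not cover the training costs; for example, 8,000 in benefits against 16,000 in costs gives -50%.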

Analyzing Program Implementation and Sustainability

Evaluating a training program isn’t just about measuring initial learning; it’s crucial to understand how the program is put into practice and whether its benefits endure. This involves examining the entire implementation process, from initial planning to ongoing support, and assessing the program’s ability to create lasting change within participants and the organization. This ensures the program effectively achieves its intended goals and remains valuable over time.

A thorough evaluation of implementation and sustainability uncovers potential weaknesses, allowing for course correction and improvement. Identifying challenges early on helps tailor future programs, ensuring greater efficiency and impact. Understanding the program’s long-term effects on participants and the organization provides a clear picture of its overall value and its return on investment.

Evaluating the Implementation Process

Careful consideration of the implementation process is vital for a comprehensive evaluation. This includes reviewing the initial planning stages, identifying potential roadblocks, and understanding the challenges faced during execution. Tracking progress against milestones, observing the actual delivery of training materials, and assessing the quality of instructor interactions are all key aspects. Effective implementation often involves clear communication channels, adequate resources, and effective training materials.

Identifying Potential Challenges and Obstacles

Implementation challenges can arise from various factors. Resource constraints, lack of support from management, inadequate training materials, or resistance from participants can significantly impact the program’s success. Recognizing these potential obstacles allows for proactive strategies to mitigate risks. For example, if funding is limited, alternative funding sources should be explored. If management support is lacking, strategies to gain buy-in should be implemented.


Evaluating Program Sustainability

The sustainability of a training program depends on its ability to produce lasting behavioral changes within participants. This goes beyond just immediate knowledge acquisition; it examines how the program’s principles translate into practical application in the workplace. Ensuring ongoing support through follow-up activities, mentorship programs, and continuous reinforcement is critical for sustainable learning. Regular check-ins, feedback sessions, and monitoring progress against established metrics can provide valuable insights into the program’s impact over time.

Measuring Ongoing Impact on Participants and the Organization

Measuring the ongoing impact requires establishing clear metrics that align with the program’s objectives. Tracking changes in employee performance, improved efficiency, or positive shifts in team dynamics provides evidence of the program’s value. Data collection strategies should incorporate surveys, interviews, performance evaluations, and observation to obtain a comprehensive view of the program’s long-term effects. For instance, evaluating the frequency of participants applying learned skills in their daily work tasks can provide strong evidence of program effectiveness.
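One way to track how often participants apply learned skills is to record, at each follow-up checkpoint, whether each observed participant used the skill. A minimal sketch, assuming hypothetical checkpoint labels and yes/no observations (for example, from supervisor check-ins):

```python
def application_rate_by_checkpoint(observations: dict[str, list[bool]]) -> dict[str, float]:
    """Share of observed participants who applied the skill at each follow-up checkpoint."""
    return {
        checkpoint: sum(applied) / len(applied)  # True counts as 1, False as 0
        for checkpoint, applied in observations.items()
        if applied  # skip checkpoints with no observations yet
    }


# Hypothetical supervisor check-ins: True = skill observed in daily work.
follow_ups = {
    "30 days": [True, True, False, True],
    "90 days": [True, False, False, True],
    "180 days": [],
}
print(application_rate_by_checkpoint(follow_ups))  # {'30 days': 0.75, '90 days': 0.5}
```

A falling rate between checkpoints, as in this example, may indicate that follow-up reinforcement such as mentoring or refresher sessions is needed.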

Addressing Specific Training Needs

Tailoring training programs to meet specific needs is crucial for maximizing impact and ensuring that employees acquire the skills and knowledge required for their roles and organizational goals. A well-designed training program that aligns with the particular requirements of a team or department can significantly improve performance and efficiency. This involves understanding the existing skill gaps and tailoring the program to address them effectively.

Effective training programs go beyond generic knowledge dissemination. They focus on the unique needs of the individuals or groups receiving the training. This approach not only enhances learning but also increases the likelihood of successful application of the learned skills in the workplace.


Adapting Evaluation Criteria to Specific Training Needs

Evaluation criteria should be adjusted to reflect the specific training goals and objectives. This adaptation is critical to ensure the evaluation accurately assesses the program’s effectiveness in addressing those needs. Criteria should directly measure the skills and knowledge relevant to the training’s intended outcomes.

Examples of Tailored Evaluation Criteria

Different industries, roles, and learning objectives necessitate distinct evaluation criteria. For instance, a sales training program for a retail environment might focus on customer interaction skills, sales techniques, and product knowledge. The evaluation would include role-playing scenarios, product knowledge assessments, and sales performance data analysis. Conversely, a leadership training program for managers could evaluate their ability to delegate tasks, provide constructive feedback, and foster team collaboration.

This would involve observation of leadership behaviors in real-world situations, feedback from team members, and self-assessments.

Identifying Aspects of Training Needs

Understanding the nuances of training needs is crucial for developing appropriate evaluation criteria. Consider factors such as the current skill level of participants, the specific knowledge gaps to be addressed, and the desired performance outcomes. For example, if the training aims to improve data analysis skills, the evaluation criteria must assess the ability to interpret data, draw conclusions, and apply analytical techniques.

Evaluating Training Program Suitability

The suitability of a training program to specific needs should be evaluated through several key aspects. These include the alignment of the training content with the identified skill gaps, the relevance of the learning methodologies to the target audience, and the overall effectiveness of the program in achieving the desired outcomes. For example, a training program focused on project management skills should include practical exercises, real-world case studies, and opportunities for peer-to-peer learning to reflect the diverse complexities of project work.

The evaluation should assess if these methods effectively address the identified project management skill gaps within the company.

Considering Technology Integration

Technology is rapidly transforming the training landscape. Integrating technology effectively within training programs can significantly enhance learning outcomes, engagement, and overall program effectiveness. From interactive simulations to online assessments, technology offers numerous avenues for delivering and evaluating training content. Evaluating this integration is crucial to ensure the chosen technologies align with learning objectives and contribute positively to the training experience.

Evaluating the technology integration within a training program requires a multifaceted approach that considers various aspects of the digital tools and resources employed. This involves assessing not only the technical functionality but also the user experience, learner engagement, and the alignment of technology with learning objectives. Effective evaluation strategies will provide valuable insights into the program’s strengths and weaknesses, allowing for necessary adjustments to maximize learning and improve future programs.

Evaluating Online Training Platforms

Online training platforms play a pivotal role in modern learning environments. To evaluate their effectiveness, consider factors like ease of navigation, platform responsiveness, and user interface design. Accessibility and compatibility with diverse devices are also critical. User feedback, gathered through surveys and focus groups, is essential to understand the platform’s strengths and areas needing improvement. A thorough evaluation should also examine the platform’s features and functionalities, including learning management system (LMS) capabilities, communication tools, and content delivery mechanisms.

Evaluating E-Learning Tools

E-learning tools are essential for interactive learning experiences. The effectiveness of these tools depends on their ability to engage learners and facilitate knowledge retention. Factors to consider include the interactivity of the content, the variety of learning activities, and the accessibility of multimedia resources. Assessment of e-learning tools should also consider the platform’s capability to track learner progress and provide personalized feedback.

Interactive simulations and gamified learning activities are examples of e-learning tools that can be evaluated against these factors for their contribution to knowledge transfer.

Evaluating Digital Resources

Digital resources are indispensable for supplementing and enhancing training programs. Their effectiveness hinges on factors like accuracy, currency, and relevance to the training objectives. A critical evaluation process will assess the reliability and credibility of these resources. This evaluation should also include accessibility considerations, ensuring that the resources are usable by learners with varying technological skills and access levels.

Evaluation of digital resources could also include metrics for time spent on each resource, frequency of use, and learner engagement with the content.
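Usage metrics like these can be computed from an activity log. The sketch assumes the learning platform can export view events as (learner ID, resource ID, minutes) records; that export format and the sample data are hypothetical.

```python
from collections import defaultdict


def resource_usage(events: list[tuple[str, str, float]]) -> dict[str, dict[str, float]]:
    """Aggregate (learner_id, resource_id, minutes) view events per resource."""
    minutes: dict[str, float] = defaultdict(float)
    views: dict[str, int] = defaultdict(int)
    learners: dict[str, set[str]] = defaultdict(set)
    for learner_id, resource_id, mins in events:
        minutes[resource_id] += mins        # total time spent on the resource
        views[resource_id] += 1             # how often it was opened
        learners[resource_id].add(learner_id)  # who opened it at least once
    return {
        rid: {
            "total_minutes": minutes[rid],
            "views": views[rid],
            "unique_learners": len(learners[rid]),
        }
        for rid in sorted(minutes)
    }


# Hypothetical export of view events from a learning platform.
log = [
    ("ana", "video-1", 12.0),
    ("ben", "video-1", 8.0),
    ("ana", "video-1", 5.0),
    ("ben", "checklist", 3.0),
]
for rid, stats in resource_usage(log).items():
    print(rid, stats)
```

Unique learners is reported alongside total views because a few heavy users can otherwise make a resource look more widely used than it is.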

Technology Integration Evaluation Methods

Various methods can be used to evaluate technology integration within a training program. These methods often involve gathering data and feedback from stakeholders.

- Usability Testing: Usability testing involves observing learners as they interact with the online platform or e-learning tools. It reveals where the technology is difficult to navigate or use, how intuitive the design is, and where the user flow breaks down, pointing to specific improvements.

- User Feedback Analysis: Collecting and analyzing user feedback through surveys, questionnaires, and interviews is crucial for understanding learners’ perspectives on the technology used in the training program. This feedback shows which aspects of the technology are well received and which require improvement. Qualitative feedback adds context that quantitative ratings alone might miss.
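Both methods produce data that can be summarized simply. The minimal sketch below assumes two kinds of hypothetical input:

- usability-test records giving the participant, the task, whether the task was completed, and the seconds taken
- 1-5 survey ratings per platform aspect

It computes a completion rate and median time-on-task for each task. It also flags aspects whose mean rating falls below an assumed cut-off of 3.5.

```python
from statistics import mean, median

# Hypothetical usability-test observations: (participant, task, completed, seconds).
observations = [
    ("p1", "find course", True, 40),
    ("p2", "find course", True, 55),
    ("p3", "find course", False, 180),
    ("p1", "submit quiz", True, 30),
    ("p2", "submit quiz", True, 25),
    ("p3", "submit quiz", True, 35),
]

# Hypothetical 1-5 survey ratings per platform aspect.
ratings = {"navigation": [2, 3, 2, 4], "video playback": [5, 4, 4, 5]}


def task_summary(obs):
    """Completion rate and median time-on-task (successful attempts only) per task."""
    by_task = {}
    for _, task, completed, seconds in obs:
        by_task.setdefault(task, []).append((completed, seconds))
    summary = {}
    for task, rows in by_task.items():
        done = [s for ok, s in rows if ok]
        summary[task] = {
            "completion_rate": len(done) / len(rows),
            "median_seconds": median(done) if done else None,
        }
    return summary


def low_rated(ratings, threshold=3.5):
    """Aspects whose mean rating falls below the assumed threshold."""
    return sorted(aspect for aspect, scores in ratings.items() if mean(scores) < threshold)


print(task_summary(observations))
print(low_rated(ratings))
```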

Creating a Comprehensive Evaluation Report

A well-structured evaluation report is crucial for understanding the effectiveness of a training program and identifying areas for improvement. It serves as a valuable tool for demonstrating the program’s impact, justifying future investments, and ensuring continuous enhancement. This report goes beyond simply summarizing data; it provides actionable insights to optimize the program’s design, delivery, and overall impact.

Thorough evaluation requires compiling and analyzing data from various sources, including participant feedback, performance assessments, and cost analysis.

A comprehensive report distills this information into a clear, concise narrative that showcases the program’s strengths and weaknesses. This report becomes a critical reference point for stakeholders, informing decisions about future training initiatives.

Compiling and Organizing Evaluation Data

To create a robust report, systematically gather and organize data from different evaluation sources. This includes collecting pre- and post-training assessments, participant surveys, feedback forms, and observations of program implementation. Data should be meticulously recorded and stored in a consistent format to ensure accuracy and facilitate analysis. Employing spreadsheet software or dedicated evaluation platforms can streamline this process, enabling efficient data management.
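A consistent format makes it straightforward to pair each participant's pre- and post-training results. The sketch below assumes hypothetical CSV exports with `participant_id` and `score` columns. It normalizes the IDs and computes each participant's gain. It also lists participants who appear in only one file, so they are not silently dropped.

```python
import csv
import io

# Hypothetical spreadsheet exports; in practice these would be read from files.
PRE_CSV = """participant_id,score
p1,55
p2,70
p3,62
"""
POST_CSV = """participant_id,score
p1,78
p2,74
p4,90
"""


def load_scores(text):
    """Read participant_id,score rows into a dict, normalizing IDs and score types."""
    reader = csv.DictReader(io.StringIO(text))
    return {row["participant_id"].strip().lower(): float(row["score"]) for row in reader}


def merge_pre_post(pre, post):
    """Pair pre/post scores by participant and report IDs found in only one dataset."""
    matched = {
        pid: {"pre": pre[pid], "post": post[pid], "gain": post[pid] - pre[pid]}
        for pid in sorted(pre.keys() & post.keys())
    }
    unmatched = sorted(pre.keys() ^ post.keys())
    return matched, unmatched


matched, unmatched = merge_pre_post(load_scores(PRE_CSV), load_scores(POST_CSV))
print(matched)
print("unmatched:", unmatched)
```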

Identifying Key Findings and Recommendations

The report should highlight key findings that emerge from the evaluation data. These findings could include insights into areas of strength, such as high levels of participant engagement, or areas needing improvement, such as low satisfaction scores in specific modules. Analysis should focus on patterns and trends in the data to identify meaningful conclusions. Recommendations for improvement should be directly linked to the identified findings, providing specific suggestions for enhancing the training program.

For example, if participant feedback suggests confusion regarding a particular module, the report should recommend revisions to the module’s content or delivery method.
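Linking findings to recommendations can be made systematic. The minimal sketch below rests on three assumptions:

- hypothetical per-module satisfaction ratings on a 1-5 scale
- free-text comments already coded into themes by an analyst
- a cut-off of 3.0

Each module below the cut-off becomes a finding. That finding is paired with a recommendation naming the module's most frequent complaint.

```python
from collections import Counter
from statistics import mean

# Hypothetical post-module satisfaction ratings (1 = very dissatisfied, 5 = very satisfied).
module_ratings = {
    "Module 1: Onboarding": [4, 5, 4, 4],
    "Module 2: Data Privacy": [2, 3, 2, 2],
    "Module 3: Reporting Tools": [4, 3, 4, 5],
}
# Hypothetical comment themes, coded from free-text feedback.
module_themes = {
    "Module 2: Data Privacy": ["confusing examples", "too long", "confusing examples"],
}


def findings_with_recommendations(ratings, themes, threshold=3.0):
    """Turn each module scoring below the assumed threshold into a finding plus recommendation."""
    results = []
    for module, scores in ratings.items():
        avg = mean(scores)
        if avg >= threshold:
            continue
        coded = themes.get(module, [])
        top_issue = Counter(coded).most_common(1)[0][0] if coded else "no coded comments"
        results.append({
            "module": module,
            "mean_rating": round(avg, 2),
            "recommendation": f"Revise content or delivery; most frequent issue: {top_issue}",
        })
    return results


for item in findings_with_recommendations(module_ratings, module_themes):
    print(item)
```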

Structuring the Evaluation Report

A clear and organized structure ensures the report is easily digestible and actionable. A common structure includes an executive summary, introduction, methodology, data analysis, key findings, recommendations, and conclusions. The executive summary provides a concise overview of the entire report, while the introduction contextualizes the evaluation and its purpose. The methodology section details the methods used for data collection and analysis.


The data analysis section presents the findings clearly and concisely, using tables, graphs, and other visual aids to support the narrative. The recommendations section should outline actionable steps to improve the program. Finally, the conclusion summarizes the evaluation’s key takeaways and reinforces the importance of continuous improvement.

| Section | Content Description |
|---|---|
| Executive Summary | Concise overview of the entire report, highlighting key findings and recommendations. |
| Introduction | Contextualizes the evaluation and its purpose. |
| Methodology | Details the methods used for data collection and analysis. |
| Data Analysis | Presents findings clearly, using visuals to support the narrative. |
| Key Findings | Highlights significant patterns and trends in the data. |
| Recommendations | Outlines actionable steps to improve the program. |
| Conclusion | Summarizes key takeaways and reinforces the importance of continuous improvement. |
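Sometimes report sections are drafted separately. In that case, a small helper can assemble them in the order given in the table above and flag any that are still empty. This is a hypothetical sketch: `assemble_report` is an illustrative name, the drafts are invented, and the output is plain text rather than any particular document format.

```python
# Section order taken from the report structure table.
REPORT_SECTIONS = [
    "Executive Summary",
    "Introduction",
    "Methodology",
    "Data Analysis",
    "Key Findings",
    "Recommendations",
    "Conclusion",
]


def assemble_report(drafts):
    """Join section drafts in the standard order; return the text and any empty sections."""
    missing = [s for s in REPORT_SECTIONS if not drafts.get(s, "").strip()]
    parts = []
    for section in REPORT_SECTIONS:
        body = drafts.get(section, "").strip() or "[TODO: section not yet drafted]"
        parts.append(f"{section}\n\n{body}")
    return "\n\n".join(parts), missing


# Hypothetical partial drafts.
drafts = {
    "Introduction": "This report evaluates the onboarding program.",
    "Methodology": "Pre/post assessments and a participant survey.",
}
report, missing = assemble_report(drafts)
print(report)
print("Still missing:", missing)
```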

Ensuring Validity and Reliability of Evaluations

Evaluating training programs effectively hinges on the validity and reliability of the methods used. A robust evaluation process ensures that the findings accurately reflect the program’s impact and are consistent across different assessments. Without these crucial elements, conclusions drawn from the evaluation may be misleading, leading to wasted resources and ineffective future training initiatives.

A critical aspect of high-quality training program evaluation is the meticulous design and implementation of assessment methods.

This involves establishing clear criteria, selecting appropriate tools, and ensuring consistent application throughout the evaluation process. The use of standardized instruments and procedures minimizes biases and maximizes the objectivity of the evaluation results.

Establishing Evaluation Validity

Validity in evaluation refers to the extent to which an evaluation accurately measures what it intends to measure. A valid evaluation ensures that the assessment tools and procedures accurately reflect the learning objectives and the intended outcomes of the training program. This involves careful consideration of the different types of validity, including content validity, criterion validity, and construct validity.

- Content Validity: This type of validity focuses on whether the evaluation instrument adequately represents the content and scope of the training program. A thorough review of the training curriculum and learning objectives is essential to ensure that all critical aspects are covered in the evaluation.

- Criterion Validity: This form of validity assesses the relationship between the evaluation results and an external criterion. For instance, comparing assessment scores with performance on job-related tasks, or with scores on an established, independent knowledge test, can provide evidence of criterion validity. A strong correlation suggests that the evaluation is measuring what it intends to measure.

- Construct Validity: This type of validity examines whether the evaluation accurately measures the underlying theoretical constructs or concepts related to the training program. For example, if the training aims to improve teamwork skills, the evaluation should accurately assess changes in collaborative behaviors and communication patterns among participants.
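Criterion validity is commonly quantified with a correlation coefficient. The sketch below computes Pearson's r between two sets of hypothetical scores for the same participants: post-training assessment results and supervisor ratings of job performance, which serve as the external criterion. The data are invented for illustration.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined when one list has no variation")
    return cov / (sx * sy)


# Hypothetical data: assessment scores and supervisor job-performance ratings (1-5).
assessment = [62, 70, 75, 81, 88, 93]
job_rating = [2.8, 3.1, 3.0, 3.6, 4.2, 4.4]
r = pearson(assessment, job_rating)
print(f"criterion validity coefficient r = {r:.2f}")
```

With only six participants, even a high r is weak evidence. In practice, a larger sample and a test of statistical significance would be needed before drawing conclusions.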

Ensuring Evaluation Reliability

Reliability in evaluation refers to the consistency and stability of the evaluation results. A reliable evaluation produces similar results when repeated under similar conditions. Ensuring reliability is crucial to minimizing errors and maximizing the consistency of the evaluation process.

- Test-Retest Reliability: This method involves administering the same evaluation instrument to the same group of participants on two separate occasions. The occasions should be close enough together that participants' knowledge is not expected to have changed. A high correlation between the results of the two administrations indicates good test-retest reliability. This stability matters when the same instrument is later used to track knowledge retention over time.

- Inter-Rater Reliability: If multiple evaluators are involved, inter-rater reliability assesses the consistency of their judgments. This is crucial for qualitative evaluations, such as observations of participant behavior or feedback forms. Establishing clear criteria and guidelines for observation and evaluation will minimize discrepancies among raters.

- Internal Consistency Reliability: This type of reliability assesses the consistency of different parts of the same evaluation instrument. Suppose a questionnaire has multiple questions intended to measure the same construct. High internal consistency indicates that participants’ answers to those questions move together, as expected if they all measure the same thing. Cronbach’s alpha is a common statistic used to quantify internal consistency.
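Two of the reliability measures above can be computed directly. The sketch below implements Cronbach's alpha with the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of total scores). For inter-rater reliability, it uses Cohen's kappa, a widely used chance-corrected agreement statistic for two raters. The text above does not name kappa; it is one reasonable choice. Test-retest reliability is typically reported as the correlation between the two administrations' scores, so it is not repeated here. All data are hypothetical.

```python
from collections import Counter
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha; `responses` holds one list of k item scores per respondent."""
    k = len(responses[0])
    if k < 2 or any(len(r) != k for r in responses):
        raise ValueError("each respondent needs the same number (at least 2) of item scores")
    item_variances = [variance([r[i] for r in responses]) for i in range(k)]
    total_variance = variance([sum(r) for r in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two raters, corrected for chance agreement."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same, non-empty set of observations")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a.keys() | count_b.keys()) / n**2
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


# Hypothetical teamwork questionnaire: 5 respondents x 4 items (1-5 scale).
responses = [
    [4, 5, 4, 4],
    [2, 2, 3, 2],
    [3, 3, 3, 4],
    [5, 4, 5, 5],
    [1, 2, 1, 2],
]
# Hypothetical observation codes from two raters: did the participant collaborate?
rater_a = ["yes", "yes", "no", "yes", "no", "no", "yes", "yes"]
rater_b = ["yes", "no", "no", "yes", "no", "yes", "yes", "yes"]

print(f"Cronbach's alpha = {cronbach_alpha(responses):.2f}")
print(f"Cohen's kappa = {cohens_kappa(rater_a, rater_b):.2f}")
```

Here the raters agree on 75% of observations, yet kappa is only about 0.47. Much of their "yes" agreement would be expected by chance alone. Clearer observation criteria are the usual remedy.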

Maintaining Evaluation Quality and Consistency

Consistent evaluation procedures contribute significantly to the overall validity and reliability of the results. Maintaining a standardized approach minimizes biases and errors.

- Standardized Procedures: Developing a clear and comprehensive evaluation plan, outlining all steps and procedures, is essential. This plan should include detailed instructions for data collection, analysis, and reporting.

- Trained Evaluators: Providing training and support to evaluators ensures consistency in data collection and interpretation. This minimizes the impact of personal biases and errors in data collection.

- Rigorous Data Analysis: Using appropriate statistical methods and techniques for data analysis ensures that the evaluation results are accurate and reliable. Using established and well-vetted statistical tools minimizes potential errors in analysis and interpretation.

Closure

In conclusion, evaluating a training program is a multifaceted process that requires careful consideration of various criteria. By thoroughly assessing learning outcomes, program design, participant engagement, cost-effectiveness, and implementation, organizations can ensure the training programs they invest in are truly impactful and valuable. This framework will empower you to design and deliver training programs that effectively meet the needs of your learners and contribute to the success of your organization.

Answers to Common Questions

What if a training program doesn’t meet the initial objectives?

If a training program falls short of its objectives, a thorough review of the program design, delivery methods, and participant engagement is essential. Analyze the reasons for the shortfall and use this information to adjust future training programs for better alignment with the desired outcomes.

How can I ensure the validity and reliability of the evaluation process?

Ensuring validity and reliability involves using appropriate evaluation methods, ensuring clear criteria, and maintaining consistency throughout the evaluation process. Rigorous methodology, consistent data collection, and transparent reporting are key to ensuring accurate and trustworthy results.

How can I tailor the evaluation criteria to specific training needs?

Tailoring criteria involves identifying the specific learning objectives and desired outcomes of the training. Adapt the evaluation measures to align with these needs, considering factors like industry, roles, and learning styles. A flexible approach is crucial to ensure relevance and impact.

What are some common pitfalls to avoid when evaluating training programs?

Common pitfalls include overlooking participant feedback, neglecting cost-benefit analysis, and not considering the long-term impact of the program. Thorough planning, consistent data collection, and open communication are essential to avoid these issues.